CMU Scientists Solve 90-Year-Old Geometry Problem

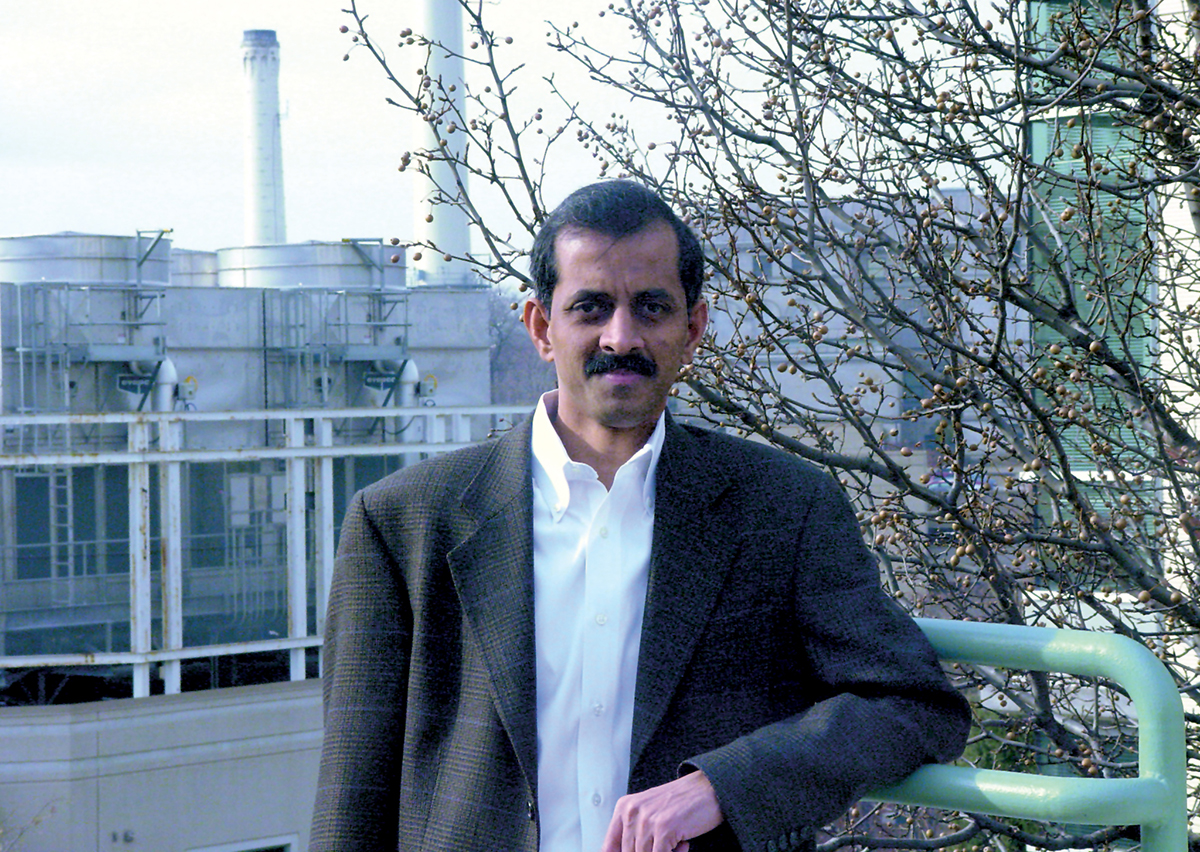

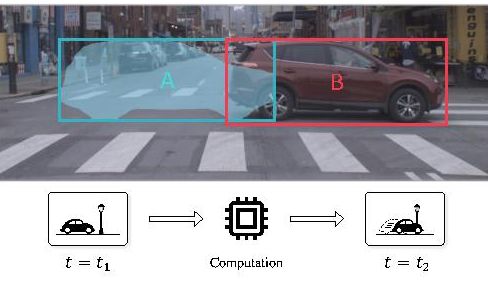

Carnegie Mellon University computer scientists and mathematicians have resolved the last, stubborn piece of Keller's conjecture, a geometry problem that scientists have puzzled over for 90 years. By structuring the puzzle as what computer scientists call a satisfiability problem, the researchers put the problem to rest with four months of frenzied computer programming and just 30 minutes of computation using a cluster of computers. "I was really happy when we solved it, but then I was a little sad that the problem was gone," said John Mackey, a teaching professor in the Computer Science Department (CSD) and Department of Mathematical Sciences who had pursued Keller's conjecture since he was a grad student 30 years ago. "But then I felt happy again. There's just this feeling of satisfaction." The solution was yet another success for an approach pioneered by Marijn Heule, an associate professor of computer science who joined CSD last August. Heule has used an SAT solver — a computer program that uses propositional logic to solve satisifiability (SAT) problems — to conquer several hoary math challenges, including the Pythagorean triples problem and Schur number 5. "The problem has intrigued many people for decades, almost a century," Heule said of Keller's conjecture. "This is really a showcase for what can be done now that was not possible previously." The conjecture, posed by German mathematician Eduard Ott-Heinrich Keller, has to do with tiling — specifically, how to cover an area with equal-size tiles without any gaps or overlap. The conjecture is that at least two of the tiles will have to share an edge and that this is true for spaces of every dimension. It's easy to prove that it's true for two-dimensional tiles and three-dimensional cubes. As of 1940, the conjecture had been proven true for all dimensions up to six. In 1990, however, mathematicians proved that it doesn't work at dimension 10 or above. That's when Keller's conjecture captured the imagination of Mackey, then a student at the University of Hawaii. With an office next to the university's computing cluster, he was intrigued because the problem could be translated, using discrete graph theory, into a form that computers could explore. In this form, called a Keller graph, researchers could search for "cliques" — subsets of elements that connect without sharing a face, thus disproving the conjecture. In 2002, Mackey did just that, discovering a clique in dimension eight. By doing so, he proved that the conjecture fails at that dimension and, by extension, in dimension nine. That left the conjecture unresolved for dimension seven. When Heule arrived at CMU from the University of Texas last year, he already had a reputation for using the SAT solver to settle long-standing open math problems. "I thought to myself, maybe we can use his technique," Mackey recalled. Before long, he began discussing how to use the SAT solver on Keller's conjecture with Heule and Joshua Brakensiek, a double major in mathematical sciences and computer science who is now pursuing a Ph.D. in computer science at Stanford University. An SAT solver requires structuring the problem using a propositional formula — (A or not B) and (B or C), etc. — so the solver can examine all of the possible variables for combinations that will satisfy all of the conditions. "There are many ways to make these translations, and the quality of the translation typically makes or breaks your ability to solve the problem," Heule said. With 15 years of experience, Heule is adept at performing these translations. One of his research goals is to develop automated reasoning so this translation can be done automatically, allowing more people to use these tools on their problems. Even with a high quality translation, the number of combinations to be checked in dimension seven was mind-boggling — a number with 324 digits — with a solution nowhere in sight even with a supercomputer. But Heule and the others applied a number of tricks to reduce the size of the problem. For instance, if one data configuration proved unworkable, they could automatically reject other combinations that relied on it. And since much of the data was symmetrical, the program could rule out mirror images of a configuration if it reached a dead end in one arrangement. Using these techniques, they reduced their search to about a billion configurations. They were joined in this effort by David Narvaez, a Ph.D. student at the Rochester Institute of Technology, who was a visiting researcher in the fall of 2019. Once they ran their code on a cluster of 40 computers, they finally had an answer: the conjecture is true in dimension seven. "The reason we succeeded is that John has decades of experience and insight into this problem and we were able to transform it into a computer-generated search," Heule said. The proof of the result is fully calculated by the computer, Heule said, in contrast to many publications that combine computer-checked portions of a proof with manual write-ups of other portions. That makes it difficult for readers to understand, he noted. The computer proof for the Keller solution contains all aspects of the solution, including a symmetry-breaking portion contributed by Narvaez, Heule emphasized, so that no aspect of the proof needs to rely on manual effort. "We can have real confidence in the correctness of this result," he said. A paper describing the resolution by Heule, Mackey, Brakensiek and Narvaez won a Best Paper award at the International Joint Conference on Automated Reasoning in June. Solving Keller's conjecture has practical applications, Mackey said. Those cliques that scientists look for to disprove the conjecture are useful in generating nonlinear codes that can make the transmission of data faster. The SAT solver thus can be used to find higher dimensional nonlinear codes than previously possible. Heule recently proposed using the SAT solver to tackle an even more famous math problem: the Collatz conjecture. In this problem, the idea is to pick any positive whole number and divide by 2 if it's an even number or multiply by 3 and add 1 if it's an odd number. Then apply the same rules to the resulting number and each successive result. The conjecture is that the eventual result will always be 1. Solving Collatz with the SAT solver "is a long shot," Heule acknowledged. But it is an aspirational goal, he added, explaining that the SAT solver might be used to resolve a number of less-intimidating math problems even if Collatz proves unattainable.