CMU Celebrates Picturephone’s 50th Anniversary By Recreating Historic First Call

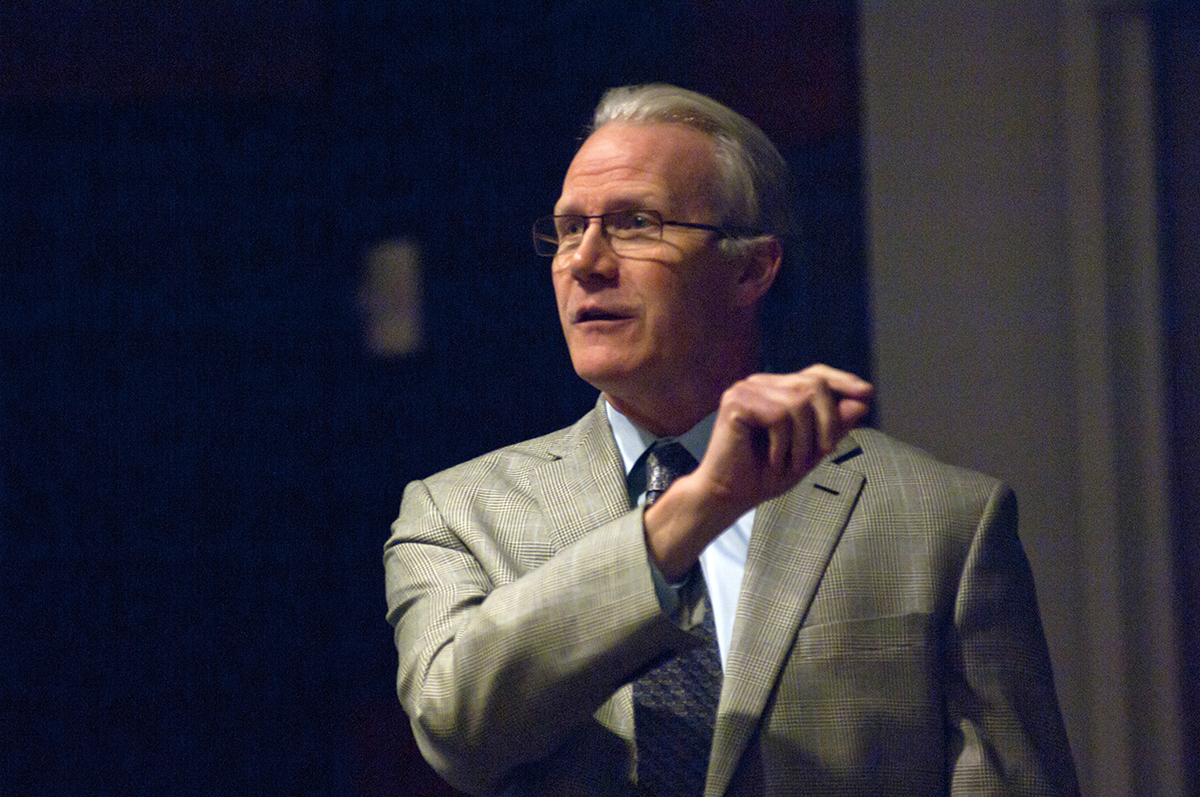

On June 30, 1970, AT&T’s Mod II Picturephone was publicly demonstrated for the first time in Pittsburgh, with then-Pittsburgh Mayor Pete Flaherty speaking "face-to-face" with the former chair of Alcoa, John Harper. Thus began commercial video calling service.

To celebrate the event's 50th anniversary, Carnegie Mellon University's School of Computer Science and University Libraries collaborated on June 30 to recreate the call. Pittsburgh Mayor William Peduto and Alcoa Chair Michael G. Morris spoke via Zoom, just as their predecessors did a half century ago via the Mod II.

"I would not have guessed a year ago that this would become such a commonplace way of communicating," Peduto said via Zoom from the City-County Building in Downtown Pittsburgh. "The events of the past four months have reinforced just how essential this technology is."

Morris said that Alcoa recently held its annual shareholder meeting virtually rather than in person due to the global pandemic, and praised the convenience of videoconferencing.

"For the generation that grew up with this, they think the technology is natural," Peduto said. "But imagine fifty years ago. Ten years ago I was still tripping over that phone cord in my mom’s kitchen."

Peduto said he now videoconferences on a daily basis. He meets frequently with his own staff a few miles away, and with colleagues globally. "Being able to do so seamlessly during a time of a pandemic has made the ability to deliver critical services for the city able to continue."

After the call reenactment, a panel including CMU’s Chris Harrison, Andrew Meade McGee and Molly Wright Steenson considered the legacy and future of video.

"Besides modern video calls being in color, the end experience is remarkably unchanged," said Harrison, an assistant professor in the Human-Computer Interaction Institute. He recently updated an original set of Mod II Picturephones, which were purchased by CMU University Libraries. The modern computer and screen he installed allow the devices to be used for calls made via Skype, Zoom or FaceTime.

"That’s true also of the telephone, that the end experience is remarkably unchanged," Harrison said. "And I think that fifty years from now I would not be surprised if we still have telephones and videoconferencing. Those media are successful. What I think will happen is more media will be added."

Steenson wondered if holograms will be a part of that future. Steenson, senior associate dean for research in the College of Fine Arts, said she admires the 1970s aesthetic of the Mod II, and pointed out that the biggest difference in modern video calling is that it is mobile.

"You’re no longer calling from place to place, you're calling from a number that floats freely," said McGee, a CLIR postdoctoral fellow in the History of Science and Computing in the University Libraries. "The hardware of the Mod II is replaced by software you can take anywhere, connecting people regardless of where they are."

A video of the event is available on YouTube.