Live-Streamed Game Collects Sounds To Help Train Home-Based Artificial Intelligence

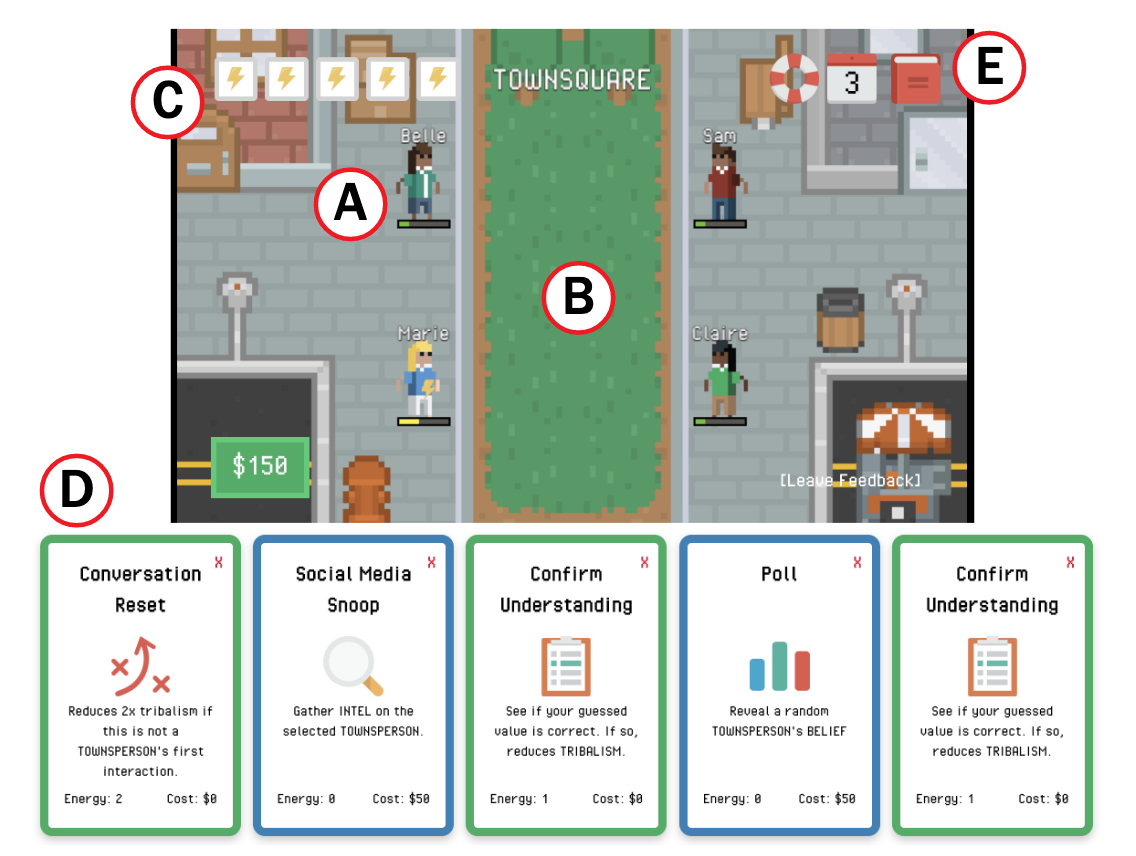

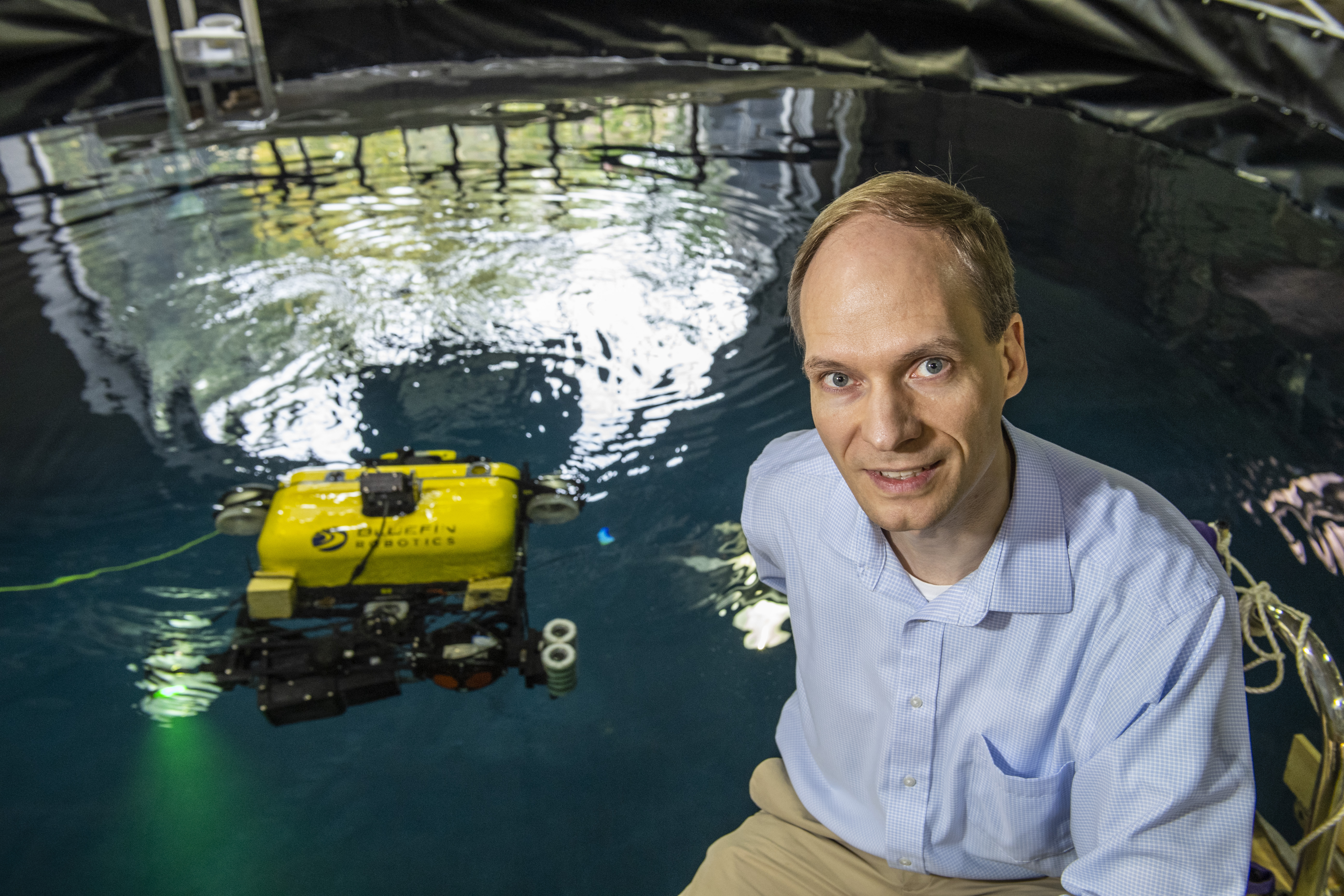

From yawning to closing the fridge door, a lot of sounds occur within the home. Such sounds could be useful for home-based artificial intelligence applications, but training that AI requires a robust and diverse set of samples. A video game developed by Carnegie Mellon University researchers leverages live streaming to collect sound donations from players that will populate an open-source database.

"The methods for developing machine-learning-based interaction have become so accessible that now it's about collecting the right kind of data to create devices that can do more than just listen to what we say," said Nikolas Martelaro, an assistant professor in the School of Computer Science's Human-Computer Interaction Institute (HCII). "We want these devices to use all the sounds in our environment to act."

"This data could be used to create extremely useful technologies," said Jessica Hammer, the Thomas and Lydia Moran Assistant Professor of Learning Science in the HCII and the Entertainment Technology Center. "For example, if AI can detect a loud thud coming from my daughter's room, it could wake me up. It can notify me if my dryer sounds different and I need to change the lint trap, or it can create an alert if it hears someone who can't stop coughing."

Hammer and her team developed the game, "Rolling Rhapsody," specifically to be played on the live-streaming platform Twitch. The streamer controls a ball, which they must roll around to collect treasure scattered about a pirate stronghold. Viewers contribute to the game by collecting sounds from their homes using a mobile app.

"When they submit sounds, they are donating them to the database for researchers to use, but those sounds are also used as a part of the game on the live stream, incentivizing viewers with rewards and recognition for collecting many sounds or unique sounds," Hammer said.

"Twitch reaches populations that might not otherwise be engaged in this kind of social good," said Hammer of the platform, which has become even more popular during the current novel coronavirus pandemic. "And Twitch is already used for donations, which is the case with charity streams. We thought we could leverage that strong culture of generosity to say, 'It's OK if you don't have any money. You can donate sounds from your home to help science.'"

Hammer noted that following successful live play-testing, a broader field test will be conducted later this summer. "We can use this as a proof of concept for a new kind of game experience that can result in ethical data collection from the home," Hammer said.

Privacy is paramount, and all players and viewers must opt in and provide consent to upload sounds. Additional privacy measures have also been taken, including opportunities for viewers to redact sound files that may have accidentally captured something personal. They can delete submissions, choose to store sounds locally and withdraw their consent at any time. "We can collect data in a way that's fun and feels good for everybody involved," Hammer said.

If the research team finds that their method collects different data than traditional crowd-sourced experiences, they can begin to study how this technique generalizes to other kinds of problems. "This research doesn't have to be limited to gathering audio data for the home. A simple extension is gathering other kinds of audio data. Then you can use the same game, just change the kinds of challenges you give the players," Hammer said.

The research is sponsored by Philips Healthcare and Bosch, and is part of Polyphonic, a larger project that includes an application for sound labeling and validation, and an interface where researchers can view and download sounds.