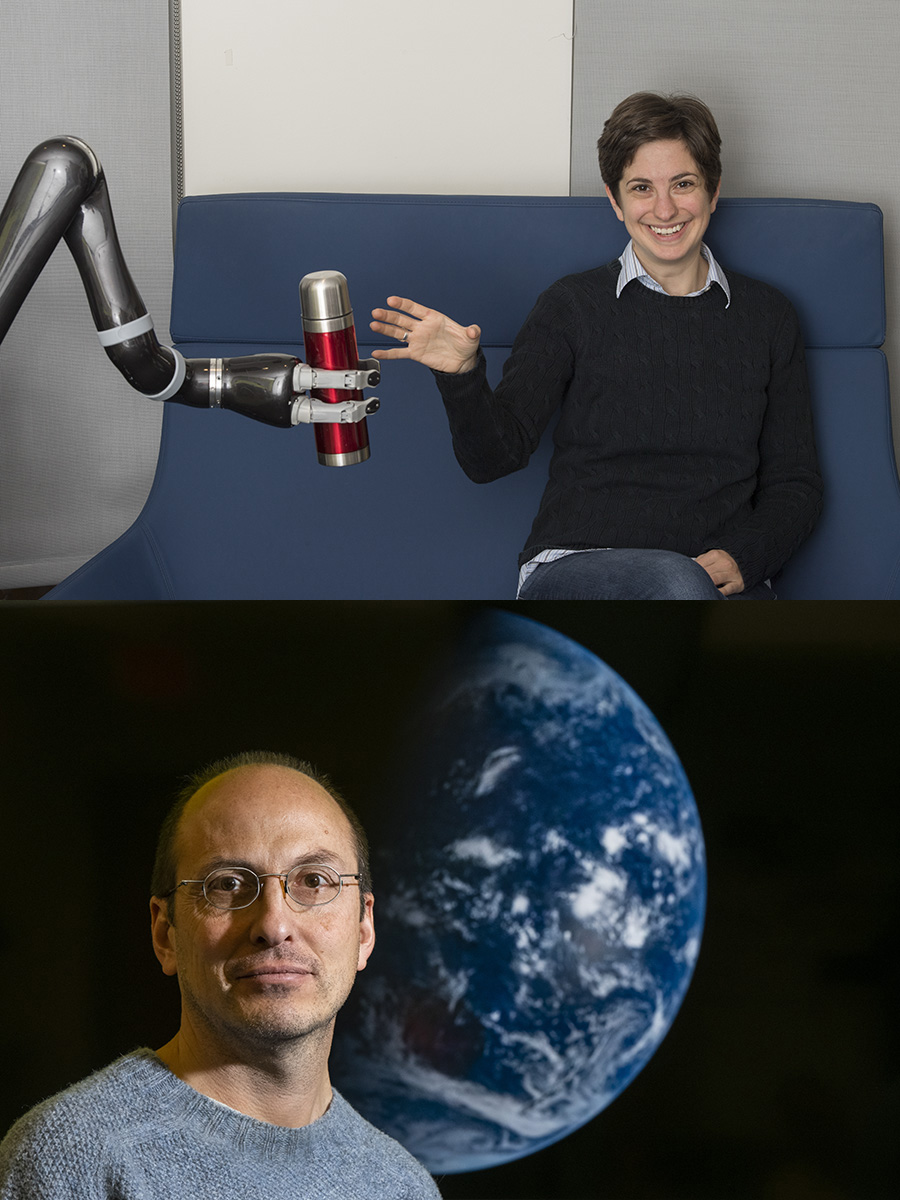

Noam Brown Named MIT Technology Review 2019 Innovator Under 35

Noam Brown, a Ph.D. student in the Computer Science Department who helped develop an artificial intelligence that bested professional poker players, has been named to MIT Technology Review's prestigious annual list of Innovators Under 35 in the Visionary category.

Brown worked with his advisor, Computer Science Professor Tuomas Sandholm, to create the Libratus AI. It was the first computer program to beat top professional poker players at Heads-Up, No-Limit Texas Hold'em. During the 20-day "Brains vs. Artificial Intelligence" competition in January 2017, Libratus played 120,000 hands against four poker pros, beating each player individually and collectively amassing more than $1.8 million in chips.

Unlike other games that computers have mastered, such as chess and Go, poker is an imperfect information game, one where players can't know exactly what cards their opponents have. That adds a layer of complexity to the game, necessitating bluffing and other strategies. Technology Review notes that some of Libratus' unorthodox strategies, such as dramatically upping the ante of small pots, have begun to change how pros play poker.

More significantly, many real-world situations resemble imperfect information games. Brown and Sandholm maintain that AIs similar to Libratus could provide automated solutions for real-world strategic interactions, including business negotiations, cybersecurity and traffic management.

Last year, Brown and Sandholm received the Marvin Minsky Medal from the International Joint Conference on Artificial Intelligence (IJCAI) in recognition of this outstanding achievement in AI. They also earned a best paper award at the 2017 Neural Information Processing Systems conference, the Allen Newell Award for Research Excellence and multiple supercomputing awards for their efforts. Brown, who will defend his Ph.D. thesis in August, is now a research scientist at Facebook AI Research.

"MIT Technology Review's annual Innovators Under 35 list is a chance for us to honor the outstanding people behind the breakthrough technologies of the year that have the potential to disrupt our lives," said Gideon Lichfield, the magazine's editor-in-chief. "These profiles offer a glimpse into what the face of technology looks like today as well as in the future."

Information about this year's honorees is available on the MIT Technology Review website and in the July/August print magazine, which hits newsstands worldwide on Tuesday, July 2.